Since the November 2016 presidential election, coverage of “fake news” has been everywhere. It’s hard to turn on the TV without hearing the term. Google and Facebook have pitched plans for fighting the menace.1 State legislators have even introduced bills to mandate K–12 instruction on the topic.2

Fake news is certainly a problem. Sadly, however, it’s not our biggest. Fact-checking organizations like Snopes and PolitiFact can help us detect canards invented by enterprising Macedonian teenagers,3 but the Internet is filled with content that defies labels like “fake” or “real.” Determining who’s behind information and whether it’s worthy of our trust is more complex than a true/false dichotomy.

For every social issue, there are websites that blast half-true headlines, manipulate data, and advance partisan agendas. Some of these sites are transparent about who runs them and whom they represent. Others conceal their backing, portraying themselves as grassroots efforts when, in reality, they’re front groups for commercial or political interests. This doesn’t necessarily mean their information is false. But citizens trying to make decisions about, say, genetically modified foods should know whether a biotechnology company is behind the information they’re reading. Understanding where information comes from and who’s responsible for it is essential in making judgments of credibility.

The Internet dominates young people’s lives. According to one study, teenagers spend nearly nine hours a day online.4 With optimism, trepidation, and, at times, annoyance, we’ve witnessed young people’s digital dexterity and astonishing screen stamina. Today’s students are more likely to learn about the world through social media than through traditional sources like print newspapers.5 It’s critical that students know how to evaluate the content that flashes on their screens.

Unfortunately, our research at the Stanford History Education Group demonstrates they don’t.* Between January 2015 and June 2016, we administered 56 tasks to students across 12 states. (To see sample items, go to http://sheg.stanford.edu.) We collected and analyzed 7,804 student responses. Our sites for field-testing included middle and high schools in inner-city Los Angeles and suburban schools outside of Minneapolis. We also administered tasks to college-level students at six different universities that ranged from Stanford University, a school that rejects 94 percent of its applicants, to large state universities that admit the majority of students who apply.

When thousands of students respond to dozens of tasks, we can expect many variations. That was certainly the case in our experience. However, at each level—middle school, high school, and college—these variations paled in comparison to a stunning and dismaying consistency. Overall, young people’s ability to reason about information on the Internet can be summed up in two words: needs improvement.

Our “digital natives”† may be able to flit between Facebook and Twitter while simultaneously uploading a selfie to Instagram and texting a friend. But when it comes to evaluating information that flows through social media channels, they’re easily duped. Our exercises were not designed to assign letter grades or make hairsplitting distinctions between “good” and “better.” Rather, at each level, we sought to establish a reasonable bar that was within reach of middle school, high school, or college students. At each level, students fell far below the bar.

In what follows, we describe three of our assessments.6 Our findings are troubling. Yet we believe that gauging students’ ability to evaluate online content is the first step in figuring out how best to support them.

Assessments of Civic Online Reasoning

Our tasks measured three competencies of civic online reasoning—the ability to evaluate digital content and reach warranted conclusions about social and political issues: (1) identifying who’s behind the information presented, (2) evaluating the evidence presented, and (3) investigating what other sources say. Some of our assessments were paper-and-pencil tasks; others were administered online. For our paper-and-pencil assessments, we used screenshots of tweets, Facebook posts, websites, and other content that students encounter online. For our online tasks, we asked students to search for information on the web.

Who’s Behind the Information?

One high school task presented students with screenshots of two articles on global climate change from a national news magazine’s website. One screenshot was a traditional news story from the magazine’s “Science” section. The other was a post sponsored by an oil company, which was labeled “sponsored content” and prominently displayed the company’s logo. Students had to explain which of the two sources was more reliable.

Native advertisements—or ads craftily designed to mimic editorial content—are a relatively new source of revenue for news outlets.7 Native ads are intended to resemble the look of news stories, complete with eye-catching visuals and data displays. But, as with all advertisements, their purpose is to promote, not inform. Our task assessed whether students could identify who was behind an article and consider how that source might influence the article’s content. Successful students recognized that the oil company’s post was an advertisement for the company itself and reasoned that, because the company had a vested interest in fossil fuels, it was less likely to be an objective source than a news item on the same topic.

We administered this task to more than 200 high school students. Nearly 70 percent selected the sponsored content (which contained a chart with data) posted by the oil company as the more reliable source. Responses showed that rather than considering the source and purpose of each item, students were often taken in by the eye-catching pie chart in the oil company’s post. Although there was no evidence that the chart represented reliable data, students concluded that the post was fact-based. One student wrote that the oil company’s article was more reliable because “it’s easier to understand with the graph and seems more reliable because the chart shows facts right in front of you.” Only 15 percent of students concluded that the news article was the more trustworthy source of the two. A similar task designed for middle school students yielded even more depressing results: 82 percent of students failed to identify an item clearly marked “sponsored content” as an advertisement. Together, findings from these exercises show us that many students have no idea what sponsored content means. Until they do, they are at risk of being deceived by interests seeking to influence them.

Evaluating Evidence

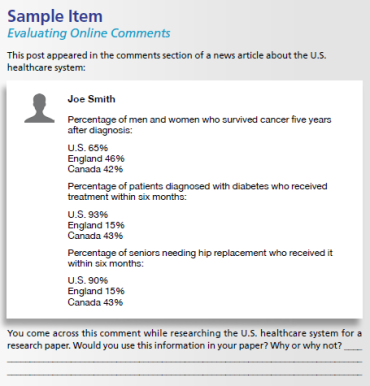

A task for middle school students tapped their ability to evaluate evidence. The Internet is filled with all kinds of claims—some backed by solid evidence and others as flimsy as air. Such claims abound in the comment sections of news articles. As online news sites have proliferated, their accompanying comment sections have become, as it were, virtual town halls, where users not only read, but debate, challenge, react, and engage publicly with fellow commenters. Our exercise assessed students’ ability to reason about the factors that make an online comment more or less trustworthy (see Sample Item below).

Students examined a comment posted on a news article about healthcare. We asked if they would use the information in a research paper. To be successful, students needed to recognize that they knew nothing about the commenter, “Joe Smith,” and his motivations for writing. Was he an expert on healthcare policy? Did he work for the Department of Health and Human Services? Adding to the dubiousness of Joe Smith’s comment was the fact that he provided no citation or links to support his claims. Without a sense of his credentials or the source for his statistics, the information he provided was virtually worthless.

Despite the many reasons to be skeptical, more than 40 percent of 201 middle school students said they would use Joe Smith’s information in a research paper. Instead of asking themselves whether the evidence he provided was sound, students saw a match between the information he presented and the topic at hand. They credulously took the numbers he provided at face value. Other students were entranced by the semblance of data in the comment and argued that the many statistics made the information credible. One student wrote that she would use the comment’s information “because the person included statistics that make me think this source is reliable.” Many middle school students, it seems, have an unflinching belief in the value of statistics—regardless of where the numbers come from.

Seeking Additional Sources

Another task tapped students’ ability to investigate multiple sources to verify a claim. Administered online, this task directed college students (as well as a group of Advanced Placement high school students) to an article on minimumwage.com about wages in the Danish and American fast-food industries. The article claimed that paying American workers more would result in increased food prices and unemployment. Students could consult any online source to determine whether the website was a reliable source of information on minimum wage policy.

The article bears all the trappings of credibility. It links to reports by the New York Times and the Columbia Journalism Review. It is published on a professional-looking website that features “Research” and “Media” pages that link to reports and news articles. The “About” page says it is a project of the Employment Policies Institute, “a non-profit research organization dedicated to studying public policy issues surrounding employment growth.” If students follow the link to the institute’s website (www.epionline.org), they encounter an even sleeker site with more research reports.

Indeed, if students never leave minimumwage.com or epionline.org, they are almost guaranteed to remain ignorant of the true authors of the sites’ content. To evaluate the article and the website on which it appears, students needed to leave those two sites and investigate what other sources had to say. If they did so, they likely learned that the institute is “run by a public relations firm that also represents the restaurant industry,” and that the owner of that firm has a record of creating “official-sounding nonprofit groups” to promote information on behalf of corporate clients.8

Fifty-eight college students and 95 Advanced Placement U.S. history students completed this task. A mere 6 percent of college students and 9 percent of high school students identified the true backers of this article. The vast majority—college and high school students alike—accepted the website as trustworthy, citing its links, research, and parent group as reasons to trust it. As one student wrote: “I read the ‘About Us’ page for MinimumWage.com and also for the Employment Policies Institute. The Institute sponsors MinimumWage.com and is a non-profit research organization dedicated to studying policy issues surrounding employment, and it funds nonpartisan studies by economists around the nation. The fact that the organization is a non-profit, that it sponsors nonpartisan studies, and that it contains both pros and cons of raising the minimum wage on its website, makes me trust this source.”

Cloaked sites like epionline.org abound on the web. These professional-looking sites with neutral descriptions advocate on behalf of their parent organizations while actively concealing their true identities and funding. Our task shows how easily students are duped by these techniques.

Where to Go from Here?

Our findings show that many young people lack the skills to distinguish reliable from misleading information. If they fall victim to misinformation, the consequences may be dire. Credible information is to civic engagement what clean air and water are to public health. If students cannot determine what is trustworthy—if they take all information at face value without considering where it comes from—democratic decision-making is imperiled. The quality of our decisions is directly affected by the quality of information on which they are based.

What should we do? A quick survey of resources available on the web shows a surfeit of materials, all of which claim to help students evaluate digital information.

Many of these resources share something in common: they provide checklists to help students decide whether information should be trusted. These checklists range in length from 10 questions to sometimes as many as 30.9 Short or long, checklist approaches tend to focus students on the most easily manipulated surface features of websites: Is a contact person provided for the article? Are sources of information identified? Are there spelling or grammatical errors? Are there banner ads? Does the domain name contain the suffix “.org” (supposedly more reliable than “.com”)?

Even if we set aside the concern that students (and the rest of us) lack the time and patience to spend 15 minutes answering lists of questions before diving into a website, a larger problem looms. Providing an author, throwing up a reference list, and ensuring a site is free of typos hardly establish it as a credible source. One could contend that in years past, the designation “.org” (for a mission-driven organization) could be trusted more than “.com” (for a profit-driven company), but that’s no longer the case. Practically any organization, legitimate or not, can obtain a “.org” domain name. In an Internet characterized by polished web design, search-engine optimization, and organizations vying to appear trustworthy, such guidelines create a false sense of confidence. In fact, checklists may make students more vulnerable to scams, not less.

The checklist approach falls short because it underestimates just how sophisticated the web has become. Worse, the approach trains students’ attention on the website itself, thus cutting them off from the most efficient route to learning more about a site: finding out what the rest of the web has to say (after all, that’s why we call it a web). In other words, students need to harness the power of the web to evaluate a single node in it. This was the biggest lesson we learned by watching expert fact checkers as they evaluated unfamiliar web content.

We interviewed journalists and fact checkers at some of the nation’s most prestigious news and fact-checking organizations as they vetted online content in real time.10 In parallel, we observed undergraduates at the nation’s most selective university, Stanford, and college professors at four-year institutions in California and Washington state as they completed the same set of online tasks. There were dramatic differences between the fact checkers and the other two groups.

Below, we describe some of the most powerful strategies employed by fact checkers and how educators can adapt them to help our students become savvy web users. (For examples of classroom activities that incorporate these strategies, see the box to the right.)

1. Teach students to read laterally. College students and even professors approached websites using checklist-like behaviors: they scanned up and down pages, they commented on site design and fancy logos, they noted “.org” domain names, and they examined references at the bottom of a web article. They often spent a great deal of time reading the article, evaluating the information presented, checking its internal logic, or comparing what they read to what they already knew. But the “close reading” of a digital source, the slow, careful, methodical review of text online—when one doesn’t even know if the source can be trusted (or is what it says it is)—proves to be a colossal waste of time.

Fact checkers approached unfamiliar content in a completely different way. They read laterally, hopping off an unfamiliar site almost immediately, opening new tabs, and investigating outside the site itself. They left a site in order to learn more about it. This may seem paradoxical, but it allowed fact checkers to leverage the strength of the entire Internet to get a fix on one node in its expansive web. A site like epionline.org stands up quite well to a close internal inspection: it’s well designed, clearly and convincingly written (if a bit short on details), and links to respected journalistic outlets. But a bit of lateral reading paints a different picture. A search for the Employment Policies Institute turns up multiple stories that reveal the organization (and its creation, minimumwage.com) as the work of a Washington, D.C., public relations firm that represents the hotel and restaurant industries.

2. Help students make smarter selections from search results. In an open search, the first site we click matters. Our first impulse might send us down a road of further links, or, if we’re in a hurry, it might be the only venue we consult. Like the rest of us, fact checkers relied on Google. But instead of equating placement in search results with trustworthiness (the mistaken belief that the higher up a result, the more reliable), as college students tend to do,11 fact checkers understood how easily Google results can be gamed. Instead of mindlessly clicking on the first or second result, they exhibited click restraint, taking their time on search results, scrutinizing URLs and snippets (the short sentence accompanying each result) for clues. They regularly scrolled down to the bottom of the results page, sometimes even to the second or third page, before clicking on a result.

3. Teach students to use Wikipedia wisely. You read right: Wikipedia. Fact checkers’ first stop was often a site many educators tell students to avoid. What we should be doing instead is teaching students what fact checkers know about Wikipedia and helping them take advantage of the resources of the fifth-most trafficked site on the web.12

Students should learn about Wikipedia’s standards of verifiability and how to harvest entries for links to reliable sources. They should investigate Wikipedia’s “Talk” pages (the tab hiding in plain sight next to the “Article” tab), which, on contentious issues like gun control, the status of Kashmir, waterboarding, or climate change, are gold mines where students can see knowledge-making in action. And they should practice using Wikipedia as a resource for lateral reading. Fact checkers, short on time, often skipped the main article and headed straight to the references, clicking on a link to a more established venue. Why spend 15 minutes having students, armed with a checklist, evaluate a website on a tree octopus (www.zapatopi.net/treeoctopus) when a few seconds on Wikipedia shows it to be “an Internet hoax created in 1998”?

While we’re on the subject of octopi: a popular approach to teaching students to evaluate online information is to expose them to hoax websites like the Pacific Northwest Tree Octopus. The logic behind this activity is that if students can see how easily they’re duped, they’ll become more savvy consumers. But hoaxes constitute a minuscule fraction of what exists on the web. If we limit our digital literacy lessons to such sites, we create the false impression that establishing credibility is an either-or decision: if it’s real, I can trust it; if it’s not, I can’t.

Instead, most of our online time is spent in a blurry gray zone where sites are real (and have real agendas) and decisions about whether to trust them are complex. Spend five minutes exploring any issue—from private prisons to a tax on sugary drinks—and you’ll find sites that mask their agendas alongside those that are forthcoming. We should devote our time to helping students evaluate such sites instead of limiting them to hoaxes.

The senior fact checker at a national publication told us what she tells her staff: “The greatest enemy of fact checking is hubris”—that is, having excessive trust in one’s ability to accurately pass judgment on an unfamiliar website. Even on seemingly innocuous topics, the fact checker says to herself, “This seems official; it may be or may not be. I’d better check.”

The strategies we recommend here are ways to fend off hubris. They remind us that our eyes deceive, and that we, too, can fall prey to professional-looking graphics, strings of academic references, and the allure of “.org” domains. Our approach does not turn students into cynics. It does the opposite: it provides them with a dose of humility. It helps them understand that they are fallible.

The web is a sophisticated place, and all of us are susceptible to being taken in. Like hikers using a compass to make their way through the wilderness, we need a few powerful and flexible strategies for getting our bearings, gaining a sense of where we’ve landed, and deciding how to move forward through treacherous online terrain. Rather than having students slog through strings of questions about easily manipulated features, we should be teaching them that the World Wide Web is, in the words of web-literacy expert Mike Caulfield, “a web, and the way to establish authority and truth on the web is to use the web-like properties of it.”13 This is what professional fact checkers do.

It’s what we should be teaching our students to do as well.

Sarah McGrew co-directs the Civic Online Reasoning project at the Stanford History Education Group, where Teresa Ortega serves as the project manager. Joel Breakstone directs the Stanford History Education Group, where his research focuses on how teachers use assessment data to inform instruction. Sam Wineburg is the Margaret Jacks Professor of Education at Stanford University and the founder and executive director of the Stanford History Education Group.

*The Stanford History Education Group offers free curriculum materials to teachers. Our curriculum and assessments have more than 4 million downloads. We initiated a research program about students’ civic online reasoning when we became distressed by students’ inability to make the most basic judgments of credibility.

†For more about the myth of “digital natives,” see “Technology in Education” in the Spring 2016 issue of American Educator.

Endnotes

1. Matthew Ingram, “Facebook Partners with Craigslist’s Founder to Fight Fake News,” Fortune, April 3, 2017, www.fortune.com/2017/04/03/facebook-newmark-trust.

2. Alexei Koseff, “Can’t Tell If It’s Fake News or the Real Thing? California Has a Bill for That,” Sacramento Bee, January 12, 2017.

3. Samanth Subramanian, “Inside the Macedonian Fake-News Complex,” Wired, February 15, 2017, www.wired.com/2017/02/veles-macedonia-fake-news.

4. Hayley Tsukayama, “Teens Spend Nearly Nine Hours Every Day Consuming Media,” The Switch (blog), Washington Post, November 3, 2015, www.washingtonpost.com/news/the-switch/wp/2015/11/03/teens-spend-nearly….

5. Urs Gasser, Sandra Cortesi, Momin Malik, and Ashley Lee, Youth and Digital Media: From Credibility to Information Quality (Cambridge, MA: Berkman Center for Internet and Society, 2012).

6. Sam Wineburg, Joel Breakstone, Sarah McGrew, and Teresa Ortega, Evaluating Information: The Cornerstone of Online Civic Literacy; Executive Summary (Stanford, CA: Stanford History Education Group, 2016).

7. Jeff Sonderman and Millie Tran, “Understanding the Rise of Sponsored Content,” American Press Institute, November 13, 2013, www.americanpressinstitute.org/publications/reports/white-papers/unders….

8. Eric Lipton, “Fight over Minimum Wage Illustrates Web of Industry Ties,” New York Times, February 10, 2014.

9. See, for example, “Ten Questions for Fake News Detection,” The News Literacy Project, accessed April 11, 2017, www.thenewsliteracyproject.org/sites/default/files/GO-TenQuestionsForFakeNewsFINAL.pdf; “Identifying High-Quality Sites (6–8),” Common Sense Media, accessed April 11, 2017, www.commonsensemedia.org/educators/lesson/identifying-high-quality-sites-6-8; “Who Do You Trust?,” Media Education Lab, accessed April 11, 2017, www.mediaeducationlab.com/sites/default/files/AML_H_unit2_0.pdf; and “Determine Credibility (Evaluating): CRAAP (Currency, Relevance, Authority, Accuracy, Purpose),” Millner Library, Illinois State University, last updated March 21, 2017, https://guides.library.illinoisstate.edu/evaluating/craap.

10. Sam Wineburg and Sarah McGrew, “What Students Don’t Know about Fact-Checking,” Education Week, November 2, 2016; and Sarah McGrew and Sam Wineburg, “Reading Less and Learning More: Expertise in Assessing the Credibility of Online Information” (presentation, Annual Meeting of the American Educational Research Association, April 27–May 1, 2017).

11. Eszter Hargittai, Lindsay Fullerton, Ericka Menchen-Trevino, and Kristin Yates Thomas, “Trust Online: Young Adults’ Evaluation of Web Content,” International Journal of Communication 4 (2010): 468–494.

12. “The Top 500 Sites on the Web,” Alexa, accessed March 20, 2017, www.alexa.com/topsites.

13. Mike Caulfield, “How ‘News Literacy’ Gets the Web Wrong,” Hapgood (blog), March 4, 2017, www.hapgood.us/2017/03/04/how-news-literacy-gets-the-web-wrong.