It is no secret that disadvantaged children are more likely to struggle in school. For decades now, public policy has focused on how to reduce the achievement gap between poorer students and more-affluent students. Despite numerous reform efforts, these gaps remain virtually unchanged, a fact that is deeply frustrating and also a little confusing. It would be reasonable to assume that background inequalities would shrink over the years of schooling, but that is not what we find. Instead, the differences we see at age 18 are roughly the same size as those we see at age 6.

Does this mean that schools can’t effectively address inequality? Certainly not. One of the key factors driving inequality in schools is unequal opportunity to learn (OTL) mathematics. In previous articles for American Educator and elsewhere, we have written about OTL and the important yet often overlooked relationship between exposure to mathematics content and mathematics performance.*

As we will explain, it is very unlikely that students will learn material they are not exposed to, and there is considerable evidence that disadvantaged students are systematically tracked into classrooms with weaker mathematics content (e.g., basic arithmetic taught in a so-called algebra class). Rather than mitigating the effects of poverty, many American schools are exacerbating them.

Previous work in this area has been limited by the data available,1 but the most recent Program for International Student Assessment (PISA) study, coordinated by the Organization for Economic Cooperation and Development (OECD), opens up new opportunities for analysis. The 2012 PISA includes student-level measures of mathematics OTL and provides powerful evidence of inequality in OTL and its relationship to student performance. Specifically, the latest PISA data show that:

- There is large variation in exposure to mathematics content;

- OTL is strongly related to student performance; and

- Lower-income students are generally exposed to less-rigorous math.

It’s not just that lower-income students are less well prepared when they enter school; the weakness of their math coursework actually keeps them from catching up.

What is truly fascinating about the PISA results is that this is a global phenomenon. In every country, more exposure to formal math content was related to better math performance, and almost every country showed a statistically significant relationship between student socioeconomic background and OTL. In other words, the problem we identified in the United States turns out to be a problem everywhere.

One interesting finding of PISA was that most of the variation in student performance was within schools rather than between them. Here in the United States, we are accustomed to talking about “good schools” and “failing schools.” According to PISA, this perspective may be overstated. On average, nearly two-thirds of the differences in student math achievement are found within the same school, not between different schools. Much of this difference lies between classrooms, as students in the same grade cover profoundly different mathematics content, even when their classes share the same course title.2 The United States does stand out, but not in the way you might expect: here, roughly three-quarters of the differences in math achievement are within the same school. The problem appears to be less one of unequal schools than of unequal classrooms.

These findings should make us reconsider our approach to education reform. Educational inequality is not a U.S.-specific problem, but some education systems do a much better job than we do in coping with the effects of poverty. More important, the math content that is taught in the classroom plays a critical role—a fact that has received far too little attention and one that we examine here.

Dispelling PISA Myths

PISA is an international assessment that measures 15-year-old students’ literacy in mathematics, reading, and science. First administered in 2000, PISA is given every three years. The results from the most recent assessment, administered to more than 500,000 students globally in 2012, were released in December 2013. Participants included the 34 OECD countries, among them the United States, as well as 28 non-OECD countries and three jurisdictions in China (Hong Kong, Macao, and Shanghai). This article focuses on the PISA mathematics results of the 34 OECD countries.

The results of the latest PISA study of mathematics were quite similar to those of other international assessments: the performance of U.S. students (481) was statistically significantly below the average of other wealthy OECD countries (494) and substantially behind the top-performing countries (such as South Korea at 554). Despite several rounds of education reform, the United States stands roughly where it did nearly two decades ago.

The response to these results has been familiar, with advocates interpreting them to fit their preconceptions. Some argue that the continuing mediocrity of U.S. students in mathematics is a dire problem requiring major action (which varies based on the ideological predisposition of the speaker), while others explain away these findings by suggesting that international comparisons are unfair because of the greater diversity of American students and/or the greater commitment of the United States to the concept of equalizing educational opportunity for all students.

We can also expect one of the top-performing countries on PISA to become something of an educational fad, with scores of newspaper articles and mounds of policy papers dedicated to uncovering the secret of its success, just as previous rounds of PISA have witnessed serial infatuations with Japan, Singapore, and Finland. This isn’t to say, of course, that nothing has been learned from these countries.†

While certainly understandable, these reactions all rather miss the point. Before digging into what PISA can usefully tell us about mathematics learning in the United States and how we might improve it, let’s first dispel a few misconceptions. First is the long-held belief that the weak-to-middling scores of U.S. mathematics students can be explained by a difference in who takes the test. It’s amazing how often one hears the assertion that other countries only allow their elite students to take PISA while the United States ensures that students from all academic levels are tested.

The reality is that every OECD country participating in PISA or, for that matter, TIMSS (the Trends in International Mathematics and Science Study, another prominent international assessment) must meet very strict requirements in terms of student participation in order to be included. The organizers of the study are extremely sensitive to the problem of sample bias. Without getting too technical, PISA is given to a random sample of all schools in a country, and within each sampled school, to a random sample of all 15-year-olds. The researchers conducting PISA make sure that the students taking the test accurately reflect the whole population of 15-year-old students in each country.
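
The two-stage design described above (sample schools first, then sample students within each sampled school) can be sketched in a few lines. This is a simplified illustration that assumes simple random sampling at both stages. PISA's operational design is more elaborate (schools are stratified and drawn with probability proportional to size, and results are weighted), and none of that is modeled here. The function name `two_stage_sample`, the roster, and the sample sizes are hypothetical.

```python
import random

def two_stage_sample(schools, n_schools, n_students, seed=None):
    """Hypothetical helper: draw a simple two-stage random sample.

    schools: dict mapping a school id to a list of eligible 15-year-old student ids
    n_schools: number of schools drawn at stage 1
    n_students: maximum number of students drawn per sampled school at stage 2
    """
    rng = random.Random(seed)
    # Stage 1: simple random sample of schools (no size weighting in this sketch).
    sampled_schools = rng.sample(sorted(schools), n_schools)
    sample = {}
    for school in sampled_schools:
        roster = schools[school]
        # Stage 2: simple random sample of students within the school;
        # a school smaller than n_students contributes all of its students.
        k = min(n_students, len(roster))
        sample[school] = rng.sample(roster, k)
    return sample

# Hypothetical roster: 100 schools with 20 to 60 eligible students each.
roster_rng = random.Random(0)
schools = {
    f"school_{i}": [f"s{i}_{j}" for j in range(roster_rng.randint(20, 60))]
    for i in range(100)
}
sample = two_stage_sample(schools, n_schools=10, n_students=35, seed=42)
print(len(sample), "schools,", sum(len(v) for v in sample.values()), "students")
```

Because every school and every student within a sampled school has a known chance of selection, the sample can be made to represent the whole population of 15-year-olds rather than a hand-picked elite.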

A common misunderstanding of the nature of international test scores often results in an exclusive focus on the “horse race” results of PISA: ranking the nations by their scores and trying to discern which ones are doing well and which are doing poorly. Making such comparisons is tempting and reflects our similar interest in comparing how well countries perform in the Olympics and the World Cup. But country rankings on PISA are not the same thing as comparing win-loss records for sports teams.

As we discuss below, most of the variation in student performance is within countries, not between them. Yes, affluent students in Japan do better than affluent students in Germany, but the gap between richer and poorer students within either country is far greater than the gap between the two countries. As a result, comparing patterns of variation across countries, rather than ranking countries by their PISA scores, may be the most useful kind of international comparison. Comparing a group of higher-performing countries with others on a few key metrics, such as the gap between richer and poorer students, and looking for general patterns contributes to a deeper understanding of key educational issues within each country and around the world.

We should also resist the temptation to assume that U.S. scores have not changed in the last dozen years. Although the U.S. PISA mathematics ranking is essentially unchanged, there are signs of progress. For example, on the 2003 PISA, U.S. students performed statistically significantly below the OECD average on all four mathematics content subscales: (1) change and relationships, (2) space and shape, (3) quantity, and (4) uncertainty and data.3 Nine years later, the mathematics performance of U.S. students was statistically indistinguishable from the OECD average on two of these subscales: change and relationships (which is closely related to algebra), and uncertainty and data (which is closely related to probability and statistics). This represents notable if unspectacular progress. Although we cannot say with certainty, the improved U.S. performance in algebra may be linked to the greater emphasis on algebra topics in state eighth-grade curriculum standards beginning about a decade ago.

That said, the United States still has a way to go in ensuring all students are exposed to algebra in eighth grade. As we have written previously, such exposure prepares them for higher levels of math in high school and postsecondary education. According to our research, algebra and geometry are topics taught in eighth grade in virtually all of the countries that participate in TIMSS, but in the United States, there is great variability in what math content students learn in eighth grade. We have found that by international standards, our eighth-grade students are too often taught sixth-grade math content.

The Common Core State Standards in math, however, give us hope because they resemble the standards of high-achieving countries in exhibiting the key features of coherence, rigor, and focus. The emphasis these new standards place on algebra is also encouraging. For instance, the Operations and Algebraic Thinking domain for kindergarten through grade 5 lays a foundation for algebra in eighth grade.

A Look at PISA and TIMSS

As we see it, one important benefit of PISA is that its data can be used to draw tentative conclusions about what influences student learning for good or ill. PISA shows us that what students are taught (the content of mathematics instruction) critically influences what they know. Just as important, it reveals that opportunities to cover that content vary widely in every country and that students from disadvantaged backgrounds are systematically exposed to weaker mathematics content, which worsens educational inequality.

Readers of our earlier pieces in American Educator might be thinking that this all sounds familiar, and it should. In those pieces, we wrote about opportunity to learn and about how American schools are characterized by pervasive inequality in OTL—inequality that is strongly associated with student socioeconomic background.

Although explicit tracking of U.S. high school students has generally diminished, our studies indicate that tracking remains a very common, if often overlooked, practice.4 With the most recent PISA results, we now have reason to believe that tracking is not just a problem in American schools but a global one.

The foundation for studying OTL internationally lies in TIMSS, which allowed us to identify the strong relationship of OTL to student learning more than a dozen years ago.5 But there are limits to how far an analysis can go using TIMSS data. In TIMSS, the measure of OTL was based on a survey of the teachers of a small number of randomly sampled classrooms within each school. The newest PISA, by contrast, asks a random sample of students in each school, and therefore students from multiple classrooms, about the mathematics content to which they have been exposed, whether formal mathematics, applied mathematics, or word problems.

While PISA’s OTL questions are less extensive than those asked in TIMSS, PISA includes questions about a student’s family background, permitting the development of an index of student socioeconomic status (the PISA index of economic, social, and cultural status) that can be applied across countries. These questions allow us to study inequalities in OTL and in student socioeconomic background, and the relationship between them, in much greater detail and in a way that more fully represents the diversity of schooling within countries.

A further distinction between PISA and TIMSS lies in how they define opportunity to learn. In the original TIMSS (1995), OTL was defined as (1) exposure to mathematics topics and (2) the amount of time teachers devoted to those topics. In the latest PISA study, OTL is defined as familiarity with and exposure to a small set of key mathematics topics (much like the list of topics found in TIMSS) as well as real-world applications and word problems. The mathematics topics are mainly those typically found in grades 8 through 12, which define the academic content of the lower- and upper-secondary curriculum. The OECD labels this “formal mathematics.”6

While TIMSS assesses formal mathematics knowledge (including the concepts, skills, algorithms, and problem-solving skills typically covered in schools), PISA assesses mathematics literacy, which the OECD defines as “an individual’s capacity to formulate, employ, and interpret mathematics in a variety of contexts. It includes reasoning mathematically and using mathematical concepts, procedures, facts, and tools to describe, explain, and predict phenomena. It assists individuals to recognise the role that mathematics plays in the world and to make the well-founded judgments and decisions needed by constructive, engaged, and reflective citizens.”

The Relationship of OTL to Performance

First, we used PISA to examine how exposure to formal mathematics, applied mathematics, and word problems relates to mathematics literacy (see Table 1 below). A comparison of country averages for these three OTL variables reveals considerable variation across countries in the emphasis placed on each, as measured on a 0 to 3 scale. Among the 33 OECD nations that participated in the study of OTL (Norway did not collect OTL data, while data from France do not permit within-school OTL analysis), Japan and South Korea had the highest average for formal mathematics (2.1) and Sweden the lowest (0.8). Portugal and Mexico averaged 2.2 on applied mathematics compared with the Czech Republic’s 1.6, while Turkey and Greece placed the least emphasis on word problems (1.3) and Iceland the most (2.4). A comparison across countries suggests that education systems that spend the most time on applied mathematics tend to have lower average PISA scores, a relationship that is statistically significant.

However, as we mentioned earlier, country rankings can be quite misleading, and a different story emerges when we focus on the patterns within the OECD countries. We found that within countries, all three measures of opportunity to learn (formal mathematics, applied mathematics, and word problems) had a statistically significant positive relationship to student performance.7 In other words, when students had more opportunities to study formal mathematics, applied mathematics, and word problems, their performance on PISA tended to increase, no matter which country they lived in.

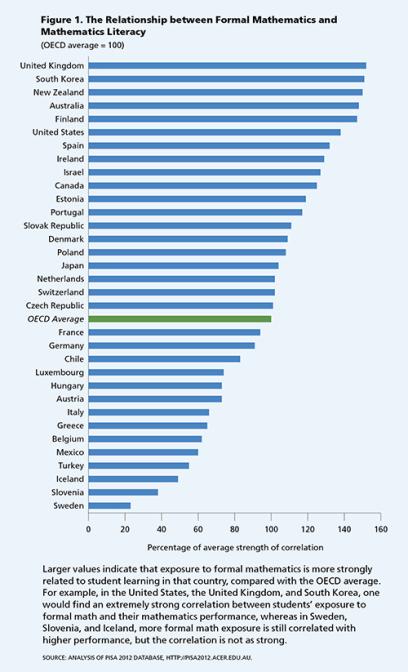

Exposure to word problems had a small positive association with PISA scores, while formal mathematics had a very strong positive relationship, with an estimated average effect size that was around half a standard deviation. For the United States, the relationship of formal mathematics to performance is particularly strong—around two-thirds of a standard deviation (see Figure 1 below). In short, PISA strongly suggests the importance of formal mathematics content.
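
Effect sizes like these are expressed in standard-deviation units of the score scale. The short sketch below converts between SD units and PISA scale points so the magnitudes can be compared with the score gaps reported earlier. It assumes a standard deviation of 100 points, a round figure based on the PISA convention of scaling scores to an OECD mean of about 500 and a standard deviation of about 100. The actual SD differs by year and country, and the text does not state which SD the reported effect sizes use, so the point values are approximations. The function names are hypothetical.

```python
ASSUMED_SD = 100.0  # assumed round SD of the PISA mathematics scale

def effect_size(score_difference, score_sd=ASSUMED_SD):
    """Express a difference in PISA scale points as a fraction of one SD."""
    return score_difference / score_sd

def points_for_effect(effect_sd, score_sd=ASSUMED_SD):
    """Convert an effect size in SD units back into approximate scale points."""
    return effect_sd * score_sd

# Averages reported in the text: U.S. 481, OECD average 494.
us_gap = effect_size(494 - 481)
# Formal-mathematics effect sizes from the text, under the assumed SD.
oecd_formal_math = points_for_effect(0.5)
us_formal_math = points_for_effect(2 / 3)
print(f"U.S.-OECD gap: {us_gap:.2f} SD")
print(f"formal math: ~{oecd_formal_math:.0f} points (OECD), ~{us_formal_math:.0f} points (U.S.)")
```

Under this assumption, the formal-mathematics effect is several times larger than the entire gap between the U.S. and OECD averages.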

The effect of applied mathematics was more complicated. Applied mathematics was associated with higher performance up to a certain point, beyond which additional exposure had a negative relationship. Generally among OECD countries, moving from no exposure to moderate exposure was associated with a substantial increase in student performance (approaching half a standard deviation), while more frequent exposure beyond that point was associated with limited gains or even declines. In other words, past a certain point, more work applying math is actually associated with lower levels of mathematics literacy.
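
A relationship that rises and then levels off or declines is often modeled by adding a squared term, score = b0 + b1*x + b2*x^2. A negative b2 bends the curve downward, and the peak falls at x = -b1 / (2*b2). The sketch below fits such a quadratic by ordinary least squares using only the Python standard library. It is an illustration under assumptions: we do not know the exact functional form used in the PISA analysis, the helper names are hypothetical, and the data are synthetic with invented coefficients.

```python
def fit_quadratic(xs, ys):
    """Ordinary least squares fit of y = b0 + b1*x + b2*x**2.

    Builds the 3x3 normal equations (X'X) b = X'y and solves them
    by Gaussian elimination with partial pivoting.
    """
    # Sums of powers of x (entries of X'X) and cross-products with y (X'y).
    s = [sum(x ** p for x in xs) for p in range(5)]
    t = [sum(y * x ** p for x, y in zip(xs, ys)) for p in range(3)]
    # Augmented matrix [X'X | X'y].
    a = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    n = 3
    for col in range(n):
        # Partial pivoting: swap in the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        # Eliminate this column from the rows below the pivot.
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    # Back substitution on the upper-triangular system.
    b = [0.0] * n
    for i in reversed(range(n)):
        b[i] = (a[i][n] - sum(a[i][j] * b[j] for j in range(i + 1, n))) / a[i][i]
    return b

def turning_point(b1, b2):
    """x at which the fitted quadratic peaks (a maximum when b2 < 0)."""
    return -b1 / (2 * b2)

# Synthetic illustration on the 0-3 OTL index scale: scores rise from
# no exposure and peak partway up the scale. Coefficients are invented.
xs = [i / 10 for i in range(31)]
ys = [420 + 80 * x - 25 * x ** 2 for x in xs]
b0, b1, b2 = fit_quadratic(xs, ys)
print(f"fitted: {b0:.1f} + {b1:.1f}x + {b2:.1f}x^2, peak at x = {turning_point(b1, b2):.2f}")
```

With real data the fit would include noise and controls, but the logic is the same: a significant negative squared term is what "gains up to a point, then limited gains or declines" looks like in a regression.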

The small positive relationship of word problems and the positive (but more complicated) relationship of applied mathematics held for many of the PISA countries. However, the positive relationship of formal mathematics to student outcomes was far more powerful and much more consistent, holding in all education systems (OECD and non-OECD countries and regions that participated in PISA).

One reason for the stronger relationship between formal math exposure and performance might be that students need to be very comfortable with a mathematical concept before they can apply it in any meaningful way. For example, to calculate what percentage of one’s income is going to pay for housing or childcare, or any other major expense, a person must have a clear understanding of how proportions work. It appears that a thorough grounding in formal mathematics concepts is a prerequisite both to understanding and to using mathematics.

What all this implies is that while embedding mathematics content in word problems or in real-world contexts may improve students’ performance, it is the content of the mathematics instruction itself that is most crucial.

Variation in Opportunity to Learn

Earlier, we noted the great variation in mathematics performance within OECD countries. There is also tremendous variation in exposure to formal mathematics content (as shown in Table 1’s “Within-Country Variation in Formal Math” column), ranging from Belgium (41 percent above the OECD average variation) to Estonia (44 percent below the OECD average variation). As we explore in more detail below, the United States is 13 percent above the OECD average variation. PISA demonstrates quite convincingly that some countries do a much better job than others of making sure all of their students have roughly equal access to rigorous mathematics content, which includes formal mathematics.
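
Figures such as “41 percent above the OECD average variation” compare one system’s spread in the formal-mathematics OTL index with the typical spread across OECD systems. The sketch below shows one plausible way to compute such a figure: the ratio of a system’s variance to the mean of all systems’ variances, minus one, expressed as a percentage. The text does not specify the exact formula (variance versus standard deviation, or any weighting), so treat this as an assumption. The function name and the index values for systems “A”, “B”, and “C” are hypothetical.

```python
from statistics import fmean, pvariance

def relative_variation(country_values, all_countries):
    """Percent by which a country's variance exceeds (or falls below)
    the mean of the per-country variances.

    country_values: student OTL index values for one system
    all_countries: dict of system name -> list of student OTL index values
    """
    mean_var = fmean([pvariance(v) for v in all_countries.values()])
    return (pvariance(country_values) / mean_var - 1) * 100

# Hypothetical formal-math OTL index values (0-3 scale) for three systems.
systems = {
    "A": [0.5, 1.0, 1.5, 2.0, 2.5],
    "B": [1.0, 1.25, 1.5, 1.75, 2.0],
    "C": [0.0, 0.75, 1.5, 2.25, 3.0],
}
for name, values in systems.items():
    print(name, f"{relative_variation(values, systems):+.1f}%")
```

All three hypothetical systems have the same average exposure (1.5); they differ only in how evenly that exposure is distributed, which is exactly what this measure captures.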

This brings us to the problem of educational inequality. Education has traditionally been viewed as a way of establishing a “level playing field” among children from different backgrounds.8 The hope has been that access to good schools will ensure equality of opportunity, so that personal merit rather than family income will determine the course of one’s life. This vision has played a fundamental role in America’s self-understanding.

However, the results of PISA confirm a growing body of research indicating that the U.S. education system is not living up to the responsibilities we have placed upon it, not because students, parents, and teachers aren’t doing their best, but because the education system has not succeeded in ensuring equality of educational opportunity. Not only do student scores vary tremendously, but so too does exposure to formal mathematics content. Further, this is a problem everywhere, not just in the United States. And sadly, although some countries are better at more evenly distributing opportunities to learn math, none of them has managed to eliminate these inequities entirely.

Lower-Income Students Are Exposed to Weaker Content

These educational inequalities are in fact strongly associated with student socioeconomic status. Ideally, we would hope that low-income students would receive at least equal if not greater educational opportunities to catch up with their peers. Instead, in every PISA-participating country, poorer students received weaker mathematics content. School systems across the globe aren’t ameliorating background inequalities; they’re making them worse. Our analysis of PISA data suggests that exposure to formal mathematics is at least as important as student background in building student mathematics literacy. Theoretically, OTL could be used to mitigate the effects of student poverty; instead, we find the opposite.

The severity of educational inequality varies appreciably across countries, whether we compare variation in OTL or the influence of student socioeconomic status. Education systems also differ greatly in how, by design or by consequence, they contribute to these inequalities. For example, inequalities between schools are greater in some countries than in others (Austria has more variation between schools than, say, Iceland). There are also substantial gaps in opportunity to learn between high- and low-income schools, with the smallest gaps among OECD systems in Estonia and the largest in Austria. These inequalities are related to performance: systems with larger OTL differences between high- and low-socioeconomic-status schools also have larger differences in mathematics literacy between those schools.9

It is vital to remember that, in every country, most of the variation in educational opportunity is within schools, not between them. On average, about 80 percent of the variation in OTL among OECD countries is within schools (see Table 1). The fact that most of the inequalities in mathematics content are within schools suggests that attempts to reduce educational inequality that focus on high- and low-performing schools will have limited effects.

A More Detailed Look at the United States

Although by many metrics the United States is quite similar to other countries, there are a few areas in which it does stand out (and not for the better). The United States appears to have greater inequality in exposure to mathematics content than other education systems do. Its total variation in formal mathematics OTL is 13 percent higher than the OECD average, the 12th largest variation among the 33 OECD systems. As we might expect, the greater variation in OTL among U.S. students goes along with a stronger-than-average relationship between exposure to formal mathematics and mathematical literacy (greater exposure is associated with higher math literacy); on this measure, the United States ranks sixth among OECD countries. Inequalities in mathematics instruction therefore play a somewhat larger role in accounting for educational inequality in the United States than in other nations.

What is most notable is the counterintuitive finding that the United States is characterized by lower between-school inequality than other countries. For years, the discussion of educational inequality and its association with student poverty has been concentrated on the problem of “failing schools,” the implication being that most of the inequities in the American education system are the product of differences between schools. This belief may have led some to suppose that the problems in the U.S. educational system are isolated, local failures, and not a failure of the U.S. education system as a whole. Although it is the case that U.S. students in schools with more-disadvantaged students are exposed to weaker mathematics content than students in more-affluent schools, this is a smaller problem in the United States than it is in other educational systems.

However, the PISA data reveal that the between-school inequality in student performance and student opportunity is dwarfed by within-school inequality. Three-quarters (76 percent) of the variation in student achievement is actually within school (compared with an OECD average of 64 percent), and 90 percent of the variation in opportunity to learn formal mathematics is within school (compared with an OECD average of 80 percent). These figures place the United States among the nations with the highest share of within-school inequality—seventh among OECD countries for OTL inequality and 10th for inequality in student outcomes.

Another feature of the United States that may differentiate it from other countries is the decentralized character of American schooling. While other nations have federal systems, the United States has long been noted for its extremely fragmented educational structure.10 This decentralization of educational structure has been accompanied by great variation in educational standards across states as well as major differences in the content of mathematics instruction across schools even in the same state.11

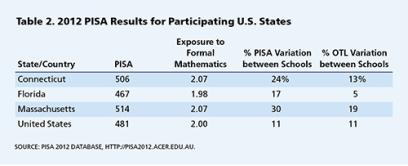

Over the last several decades, however, state governments have assumed an ever-greater share of the responsibility for school finances, administration, and curriculum. The United States is not one large education system, but (at minimum) 50 autonomous ones. We know from the National Assessment of Educational Progress (NAEP), conducted by the U.S. Department of Education’s National Center for Education Statistics, that just as there is considerable variation across countries, there is also variation among U.S. states. One of the fruits of the most recent PISA is that three states (Connecticut, Florida, and Massachusetts) agreed to participate in the study with full statistical samples, rather than being lumped in with students from other states. This means we can treat them as independent systems (or “countries”) for purposes of comparing them to the U.S. average and to other countries’ education systems (see Table 2 below).

What we find is that, while some interesting characteristics distinguish these states from one another and from other systems, they share most of the same general patterns we find in the rest of the world. Confirming the NAEP results, Massachusetts has higher average scores than the rest of the United States (514 vs. 481), although it is not among the very highest performers on PISA.

For all three states, most of the variation in student performance and opportunity to learn is within rather than between schools, and OTL is to a statistically significant degree related to student outcomes, even controlling for student socioeconomic background. In each of these three states, the same basic pattern emerges that we find in the United States as a whole and in other countries: inequalities in formal mathematics OTL exacerbate socioeconomic inequalities. In analyzing the differences between lower- and higher-income students (defined by the U.S. average), we found large gaps in both opportunity to learn and PISA scores, whether analyzed between schools, within schools, or a combination of the two.
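
The comparison of lower- and higher-income students can be sketched as splitting students at a cutoff on the socioeconomic index and comparing the two groups’ average OTL. This rests on assumptions: we read “defined by the U.S. average” as a cut at the national mean of the index. The function name, the student records, and the cutoff value are hypothetical. A within-school version would apply the same split inside each school and average the resulting gaps.

```python
from statistics import fmean

def gap_by_ses(students, cutoff):
    """Mean OTL of students at or above the SES cutoff minus mean OTL of those below it.

    students: list of (ses_index, otl_index) pairs
    cutoff: SES value separating 'lower' from 'higher' (e.g., a national average)
    """
    higher = [otl for ses, otl in students if ses >= cutoff]
    lower = [otl for ses, otl in students if ses < cutoff]
    return fmean(higher) - fmean(lower)

# Hypothetical (SES index, formal-math OTL index) pairs for one state.
state = [(-1.2, 1.1), (-0.6, 1.4), (-0.1, 1.5), (0.3, 1.9), (0.8, 2.0), (1.4, 2.3)]
national_mean_ses = 0.0  # assumed value of the national average, for illustration
print(f"OTL gap: {gap_by_ses(state, national_mean_ses):.2f} index points")
```

The same function applied to scores instead of OTL gives the corresponding achievement gap, which is how the two kinds of inequality can be set side by side.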

Our analyses indicated that although within-school relationships still matter more, school-level factors play a much greater role in Massachusetts than in the other two states: there, 30 percent of the variation in PISA scores and 19 percent of the variation in OTL were attributable to school-level factors. These findings are only suggestive but point to some worthwhile avenues for exploration. For example, what is it about the Massachusetts educational system that gives schools greater importance? And what features of Florida’s educational governance have resulted in lower inequality?

In addition, we should exercise caution when comparing subnational units to national ones. At the moment, Shanghai is getting a great deal of attention due to its high PISA scores—but Shanghai is not the whole of China any more than Massachusetts represents the entire United States. Nor does it necessarily follow that states or cities have achieved their status because of educational practices or policies. For example, the close PISA scores of the Italian Lombardy region (517) and Massachusetts (514) may partly reflect their demographic similarities, in particular their relative wealth.

* * *

Careful analyses of PISA data can tell us a great deal more than which country is currently at the top of the international standings. Research based on PISA presents strong evidence that the United States systematically disadvantages lower-income students by depriving them of strong mathematics content, but it also tells us that this is a global phenomenon. In most respects, the United States is not that different from other countries.

PISA also includes some real surprises that should prompt us to re-examine our approach to education reform. Although it confirms the great importance of the content of instruction, PISA cautions us that, when it comes to real-world applications, more is not necessarily better. We should therefore not concentrate on such applications at the expense of teaching mathematical content. PISA also calls into question the idea that tracking has decreased in American schools.

PISA does provide reason for optimism, however. The strong relationship between opportunity to learn and student outcomes suggests that schools really do matter. Some education systems are much more effective in minimizing educational inequality, a fact which, in the United States, should inspire admiration as well as a renewed commitment to the challenge of education reform in the service of quality and equality.

William H. Schmidt is a University Distinguished Professor, a codirector of the Education Policy Center, and the lead principal investigator of the Promoting Rigorous Outcomes in Mathematics and Science Education project at Michigan State University. He is a member of the National Academy of Education and a fellow of the American Educational Research Association. Nathan A. Burroughs is a research associate with the Center for the Study of Curriculum at Michigan State University. Parts of this article appeared in two posts on the Albert Shanker Institute’s blog: “PISA and TIMSS: A Distinction without a Difference?” on December 4, 2014; and “The Global Relationship between Classroom Content and Unequal Educational Outcomes” on July 29, 2014.

*See “Equality of Educational Opportunity” in the Winter 2010–2011 issue of American Educator and “Springing to Life” in the Spring 2013 issue.

†For more on what the United States can learn from Finland, Singapore, and Japan, see “A Model Lesson” in the Spring 2012 issue of American Educator, “Beyond Singapore’s Mathematics Textbooks” in the Winter 2009–2010 issue, and “Growing Together” in the Fall 2009 issue.

Endnotes

1. The Trends in International Mathematics and Science Study (TIMSS) surveys student knowledge and exposure to mathematics content at the country, school, and classroom levels but does not provide student-level information.

2. William H. Schmidt, Curtis C. McKnight, Richard T. Houang, HsingChi Wang, David E. Wiley, Leland S. Cogan, and Richard G. Wolfe, Why Schools Matter: A Cross-National Comparison of Curriculum and Learning (San Francisco: Jossey-Bass, 2001).

3. A description of the four content subscales can be found in Organization for Economic Cooperation and Development, PISA 2012 Results, vol. 1, What Students Know and Can Do: Student Performance in Mathematics, Reading and Science (Paris: OECD, 2014). Change and relationships: This category emphasizes “relationships among objects, and the mathematical processes associated with changes in those relationships” (95). Space and shape: This category emphasizes “spatial relationships among objects, and measurement and other geometric aspects of the spatial world” (103). Quantity: This category emphasizes “comparisons and calculations based on quantitative relationships and numeric properties of objects and phenomena” (105). Uncertainty and data: This category emphasizes “interpreting and working with data and with different data presentation forms, and problems involving probabilistic reasoning” (105).

4. William Schmidt and Curtis McKnight, Inequality for All: The Challenge of Unequal Opportunity in American Schools (New York: Teachers College Press, 2012).

5. Schmidt et al., Why Schools Matter.

6. From William H. Schmidt, Pablo Zoido, and Leland Cogan, “Schooling Matters: Opportunity to Learn in PISA 2012,” OECD Education Working Papers, no. 95 (Paris: OECD, 2013), 7: “Three indices were developed from the student questionnaire items: formal mathematics, an indicator of the extent of student exposure to algebra and geometry topics in classroom instruction; word problems, an indicator of the frequency that students encountered word problems in their mathematics schooling; and applied mathematics, an indicator of the frequency students in their mathematics classes encountered problems that required the application of mathematics either in a mathematical situation or in an every day, real world context.”

7. The within-country analyses used a hierarchical model with between-school and within-school levels. The relationships described in the text were found at both levels, but here we report the within-school results, since most of the variation in OTL and performance was present at that level. References to the “average” refer to the OECD countries only.

8. Schmidt and McKnight, Inequality for All.

9. Schmidt, Zoido, and Cogan, “Schooling Matters.”

10. William H. Schmidt, Curtis C. McKnight, and Senta A. Raizen, A Splintered Vision: An Investigation of U.S. Science and Mathematics Education (Dordrecht, Netherlands: Kluwer Academic Publishers, 1997).

11. Schmidt and McKnight, Inequality for All.